Road to sqlite-vec: Exploring SQLite as a RAG vector database

By David Wu (@bydavidwu) . Published on June 22, 2025.

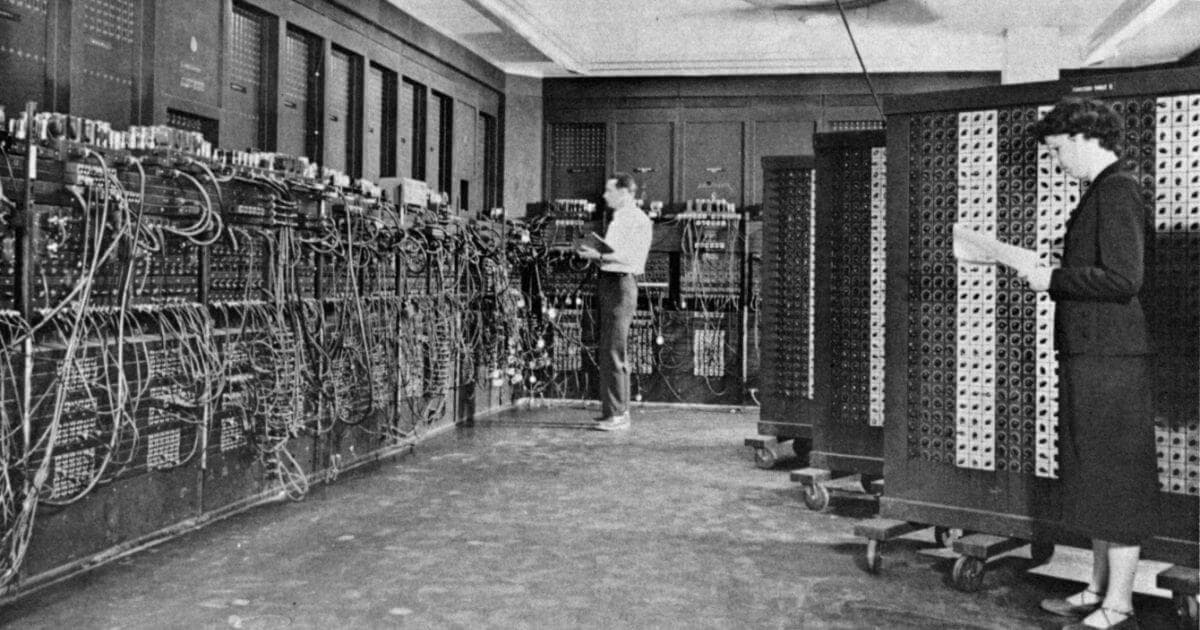

Computers (...and databases) have come a long way since ENIAC (1945), the first general-purpose electronic digital computer (Source: Wikimedia Commons).

Road to sqlite-vec

About a year ago in mid-2024, I decided to build a new side project, an AI PDF reader desktop app. I'd heard about RAG (retrieval augmented generation), so envisioned building a PDF reader with RAG-powered chat in a panel on the side (what eventually became Midswirl).

I had to make some choices.

What framework should I use for the desktop app itself?

As a web developer, Electron was the natural choice. If it's good enough to use to build VS Code, it's probably good enough for me.

What database should I use?

SQLite was the obvious choice, given I wanted the app to have access to an instance of the database locally and bundling it with an Electron app is a well-trodden path.

How should I implement the RAG and supporting AI logic?

I'd heard a lot about Langchain and after perusing the docs decided to go ahead with that.

What vector database should I use?

Uhh, I had no idea. My requirement was that I could bundle the vector database with my Electron app and store the vectors in a local instance.

At some point, I came across sqlite-vss, the precursor to sqlite-vec. Both of these open-source projects are created by the talented Alex Garcia (@agarcia_me) and are vanilla SQLite extensions that add vector support.

Given I'd already decided to use SQLite for the other database needs of my app, sqlite-vss looked appealing for the same reasons pgvector for Postgres is appealing to many: Having your vector data and your usual data in the same system and being able to query this data together in a familiar way makes for a great developer experience.

At that time, sqlite-vss was in the process of being rewritten as sqlite-vec, which would add (and has added) superior multi-platform support and other improvements.

So, I decided to wait for sqlite-vec. In the meantime, I had plenty to build and Langchain provided support for an in-memory vector database to get started with.

(Later, I also came across libSQL, the fork of SQLite with native vector support created by the talented folks over at Turso. Given I was already using SQLite in my app, I decided to stick with sqlite-vec.)

Exploring sqlite-vec

After six months of building, I had a working early iteration of my side project using Langchain's built-in in-memory vector database for the RAG part of the app. By this time around the start of this year, sqlite-vec had been released, so it was time to start exploring it with some simple example code in TypeScript courtesy of sqlite-vec's Node.js bindings.

(While written in C, there are bindings for Python, Node.js and other languages.)

To implement SQLite in my Electron app, I used better-sqlite3. I was pleasantly surprised that better-sqlite3 was so readily compatible with sqlite-vec. We can install both packages in a Node.js project using npm:

npm install better-sqlite3 sqlite-vec

After installing sqlite-vec, we can load it as follows:

import * as sqliteVec from "sqlite-vec"; import Database from "better-sqlite3"; const db = new Database(":memory:"); sqliteVec.load(db);

For the purposes of exploring sqlite-vec, it's enough to store the instance in memory instead of on disk. We can then use a simple query to check it's been loaded properly:

const selectVecLengthRes = db .prepare("select vec_length('[.1, .2]') as vec_length;") .get() as { vec_length: number }; console.log(`selectVecLengthRes.vec_length: ${selectVecLengthRes.vec_length}`); // selectVecLengthRes.vec_length: 2

Having done that, we can create a virtual table to store our vectors:

db.exec( ` CREATE VIRTUAL TABLE vec_documents USING vec0( document_id integer primary key, embedding float[4], category text ); `, );

Like the popular SQLite extension FTS for full-text search, sqlite-vec makes use of SQLite virtual tables.

In this simple example, the vec_documents table is storing vectors of dimension 4 and we have added a document_id column to use as a primary key and a category metadata column.

In practice, the vector dimensionality will be much higher. For example, OpenAI's embedding models text-embedding-ada-002 and text-embedding-3-small output vectors of dimensionality 1,536. The text-embedding-3-large model outputs even larger vectors of dimension 3,072.

Let's also create a standard SQLite table, documents, to put some data in:

db.exec( ` CREATE TABLE documents( id integer primary key autoincrement, content text ); `, );

We can then put some data into documents:

const documents = ["Apple", "Banana", "Cherry", "Dog", "Elephant", "Flamingo"]; const placeholders = documents.map(() => "(?)").join(", "); const flattenedDocuments = documents.flat(); db.prepare( ` INSERT INTO documents(content) VALUES ${placeholders}; `, ).run(flattenedDocuments);

Suppose now that we have used some embedding model to associate our documents with vectors and additionally have assigned the documents to categories. We can insert the data into vec_documents as follows:

const vectors = [ [1, [0.1, 0.1, 0.1, 0.1], "fruit"], [2, [0.2, 0.2, 0.2, 0.2], "fruit"], [3, [0.3, 0.3, 0.3, 0.3], "fruit"], [4, [0.4, 0.4, 0.4, 0.4], "animal"], [5, [0.5, 0.5, 0.5, 0.5], "animal"], [6, [0.6, 0.6, 0.6, 0.6], "animal"], ]; const insertVectorsStatement = db.prepare( "INSERT INTO vec_documents(document_id, embedding, category) VALUES (?, ?, ?);", ); const insertVectors = db.transaction((items) => { for (const [id, vector, category] of items) { insertVectorsStatement.run(BigInt(id), new Float32Array(vector), category); } }); insertVectors(vectors);

Given some query vector, we can then perform a simple cosine similarity search over data in both our documents and vec_documents tables, additionally filtering by some category:

const query = [0.7, 0.7, 0.7, 0.7]; const category = "fruit"; const closestThreeRowsByAscDistance = db .prepare( ` SELECT documents.content, vec_documents.document_id, vec_documents.distance, vec_documents.category FROM vec_documents INNER JOIN documents on documents.id = vec_documents.document_id WHERE embedding MATCH ? AND k = 3 AND category = ? ORDER BY distance; `, ) .all(new Float32Array(query), category); console.log("closestThreeRowsByAscDistance:", closestThreeRowsByAscDistance); // closestThreeRowsByAscDistance: [ // { // content: 'Cherry', // document_id: 3, // distance: 0.7999999523162842, // category: 'fruit' // }, // { content: 'Banana', document_id: 2, distance: 1, category: 'fruit' }, // { // content: 'Apple', // document_id: 1, // distance: 1.1999999284744263, // category: 'fruit' // } // ]

This to me is the magic of sqlite-vec. It integrates well with my existing SQLite tools. My vector and usual data is all there together. And as can be seen here, the query language I'm used to seamlessly extends to support vectors.

Impressed by sqlite-vec's capabilities, the next step was to integrate sqlite-vec into my existing RAG implementation, replacing the in-memory Langchain vector store with sqlite-vec.

In Langchain's modular approach, what I needed to do was swap out the built-in MemoryVectorStore class being used in my RAG logic with a custom class extending the core VectorStore class supporting my app's requirements of sqlite-vec.

Closing thoughts

sqlite-vec is a highly flexible vector database. It can be used on device, on the server via SQLite Cloud or Turso, and has bindings in many languages. Wherever you might be using SQLite, you can probably use sqlite-vec too.

At the same time, it piggybacks on the SQLite ecosystem and query language, making for a really good developer experience. For example, it can be used together with the SQLite extension FTS to implement hybrid search.

Personally, I've been very satisfied working with sqlite-vec and think you, the reader, will be as well.